The Culling and the End of Empathy

I lost a week to work travel and so did not post my weekly bulletin on the ongoing assaults on higher education. It is almost impossible to keep up even without a lost week, but I want to capture here some of the “highlights” of the last two weeks of governmental actions directed against institutions and students before turning to some larger thoughts—grim but, I think, urgent.

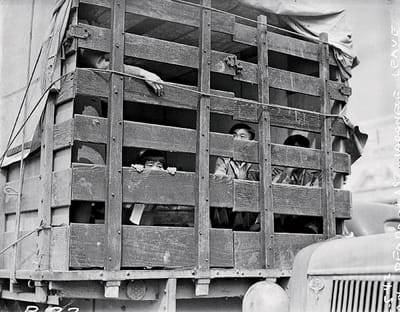

Two weeks ago I shared a list of all of the international students we knew of at the time who were under direct threat or already in custody due to the revocations of international student visas. Within a week of my last post the revocations of student visas went from seemingly targeted to utterly random—and broad. Over a thousand students have received notification from the government that their visas have been revoked, with others identified by university staff working through the central database in their institutions’ offices to check on their students. Inside Higher Ed has set up an invaluable tracker of this chaos, although any attempt to log it will (inevitably) lag behind. Keeping up and making sense of it all is, by design, an impossible task.

A recent survey suggests that interest by international postdoctoral students in attending U.S. institutions for higher education has already fallen by as much as 40%. We can expect these numbers to continue to plummet in the weeks and months ahead. In addition, there are clear signs that the U.S. will be hemorrhaging faculty and research projects as well. Three prominent Yale faculty recently announced they were leaving for positions at the University of Toronto. These include Jason Stanley, author of How Fascism Works: The Politics of Us and Them (2018), and Timothy Snyder, author of The Road to Unfreedom: Russia, Europe, America (2018). A poll of 1600 research scientists in Nature revealed that 75% are considering moving themselves and their research projects to Europe or Canada. Not surprisingly, European institutions are moving aggressively to recruit top researchers and their projects.

While the exact numbers are impossible to track at this moment, the scale of the financial blows to America’s research mission is truly overwhelming. A few numbers (no doubt out of date on the conservative side by the time I post this) underscore this point:

- More than $2.4 billion in NIH grants have been terminated

- Nearly $900 million in contracts that provide critical information on student performance and college costs have been canceled.

- A new indirect cost cap of 15% has been imposed on all NIH grant awards, further reducing the total funding universities receive.

- In the past week, every person I know who had been awarded a National Endowment for the Humanities (NEH) grant has had it canceled

- This past week Cornell University has faced a freeze of more than $1 billion in federal funding, and Northwestern University has seen a freeze of $790 million

- The NSF Graduate Research Fellowship Program, a vital engine for producing the next generation of research scientists, has been cut in half.

Of course, the funding cuts are only a part of the story of the devastation of research. Even those whose programs haven’t been directly targeted are wrestling with the consequences of the dismantling of the agencies which approve, regulate, and monitor research projects. As the guts are ripped out of these structures, even projects with secure funding grind to a halt, trapped in a bureaucracy that is no longer functional.

With 10,000 jobs being cut in Health & Human Services (HHS) and roughly 10,000 more accepting buyouts to leave “voluntarily,” the ripple effects will be felt for years to come. The breakdown of the Food and Drug Administration (FDA), the Centers for Disease Control and Prevention (CDC), and the National Institutes of Health (NIH) is already adversely impacting health outcomes. Clinical trials are already being trapped in limbo, preventing potentially lifesaving drugs and treatments from moving forward towards FDA approval. New studies are unable to get off the ground as approval mechanisms are sabotaged. The NIH has canceled over 230 grants related to HIV research alone. Cuts mean fewer opportunities for graduate students, postdocs, and early-career researchers to engage in federally funded research. This threatens the development of the next generation of scientists, weakening the talent pipeline and making U.S. institutions less competitive globally.

America’s role as the leading engine of research innovation in the world is coming to an end through the direct actions of this administration. Perhaps the most immediate impact will be felt in Public Health programs at universities, where grant stoppages and cuts are hitting disproportionately. As what The Atlantic termed “Trump’s Revenge on Public Health” continues, the immediate impact is being felt in programs dedicated to managing HIV/AIDS:

Since January, the Trump administration has been dismantling that infrastructure. It has cut funds to hundreds of HIV-related research grants, forced multiple clinical trials focused on HIV to halt, and pulled support back from studies that include LGBTQ populations, still among those hardest hit by the virus. It has all but obliterated the President’s Emergency Plan for AIDS Relief (PEPFAR), the largest funder of HIV prevention in the world. It cut billions of dollars from federal grants to states that had been used to track infectious diseases, among other health services.

And this is just the beginning. The NIH Grant Terminations tracker, updated daily, shows the ways in which the cuts have especially targeted research areas such as HIV, trans health, gender and LGBT health, COVID, and vaccinations. Five years since COVID-19 killed upwards of 3 million people in 2020 alone, demonstrating clearly the necessity for increased public health funding and education, we are gutting the entire public health infrastructure of the nation—and with devastating impacts on the rest of the world. Not only will current programs supported by university researchers be permanently shuttered, but the survival of schools of public health themselves is in doubt. And generations of future public health researchers and workers will be lost.

One of the challenges of the moment is trying to make sense of the why. Why would the administration want to destroy its historic partnership with higher education, which has made U.S. research the envy of the world? Why would they want to risk the lives of millions by disappearing invaluable health data and shutting down research into everything from pandemics to Parkinson's?

It beggars belief that the administration would really want to risk the lives of millions around the world in the name of “efficiency” and “the war on woke.” As a result it is vaguely comforting to imagine this is all due to incompetence. Or just a bunch of tech bros misguidedly “breaking things” in the name of disruptive innovation. Or motivated solely by the desire for vengeance against Fauci or the agencies that have supported the vaccine research RFK blames for autism and which he claims have never been “safe and effective.” If any or all of these were true we could imagine that eventually an adult will enter the room and make these children clean up their mess. Unfortunately, the adults are in the room. And they are quite pleased with the chaos the children have wrought.

It is clear by now to most, I suspect, that none of this ultimately is about "efficiency" or “woke,” any more than the assault on our international students is really about “the fight against anti-semitism” or the dismantling of DEI programs is about "merit." These are all pretexts for larger, darker goals, which include, first and most obviously, the concentration of arbitrary autocratic power in a presidency modeled on those of elected autocrats such as Orbán, Putin, Bukele, and Erdoğan. However, even that remodeled executive power is all in the service of longer-term goals. That is to say, the loss of millions of lives as a result of the administration's actions is not a symptom of incompetence but instead an explicit endgame. Mass death is not a bug but a feature.

The larger end goal is brilliantly described in Naomi Klein and Astra Taylor’s piece in Sunday’s Guardian, titled “The Rise of End Times Fascism.” The essay, somehow more unsettling than even its title suggests, is very much worth a close read. “The governing ideology of the far right in our age of escalating disasters has become a monstrous, supremacist survivalism,” Klein and Taylor write, describing something akin to a Brexit but on a planetary scale, in which the elite class are rejecting responsibility for anyone but themselves:

Inspired by the political philosopher Albert Hirschman, figures including Goff, Thiel and the investor and writer Balaji Srinivasan have been championing what they call “exit” – the principle that those with means have the right to walk away from the obligations of citizenship, especially taxes and burdensome regulation. Retooling and rebranding the old ambitions and privileges of empires, they dream of splintering governments and carving up the world into hyper-capitalist, democracy-free havens under the sole control of the supremely wealthy, protected by private mercenaries, serviced by AI robots and financed by cryptocurrencies.

This is not to argue that there is a unified vision syncing the policies and influence of Thiel, Musk, Miller, Bannon, Trump or any of the would-be masters of their own dystopian domains. Instead we are facing what Klein and Taylor describe as a Frankenstein’s monster of outrageously well-resourced sociopaths who have made common cause around one key belief: it is time to end the social compact as we have known it throughout modernity. It is time to head for the bunker states that will replace nation states in the world they seek to hasten into being. And if there is one big challenge to this fantasy, it is not the severing of democratic traditions or the bonds of civic responsibility, already well eroded by years of propaganda, organized and freelance. The big challenge is quite simply this: there are too many people for a bunker-state world.

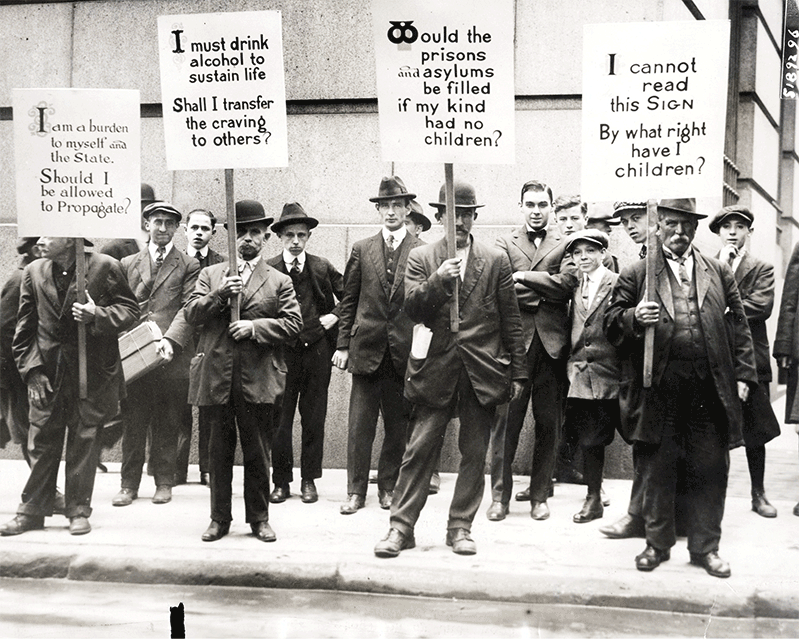

The problem of the outsized world population has long plagued thinkers on the right and the left who were convinced that democratic norms presented a danger to the future survival of their preferred version of humanity. While we tend to best remember progressive-era eugenicists for their obsession with genetics and the breeding of future human stock, most were equally concerned with the ways in which the sudden and remarkable advances in modern medicine and public health would extend the lives of those they saw as “unfit.”

As was discussed in Part 1 of the “History of Academic Freedom,” eugenicists were deeply entrenched in the highest echelons of higher education in the early decades of the 20th century—including Edward A. Ross, the Stanford professor whose 1900 firing would spark the drive for a systematic defense of academic freedom, and David Starr Jordan, the Stanford president who was ultimately forced to fire him at the behest of Jane Stanford. Indeed, most of the influential figures in shaping the "science" that would lay the foundations for Nazi ideology were employed by major U.S. research universities.

Charles Davenport taught biology at Harvard and the University of Chicago before accepting the position of director of the Cold Spring Harbor Laboratory, where he would found the Eugenics Record Office. Like other American eugenicists, he worried as much about medicine and public health as he did about immigration. As he argued in 1915, “the artificial preservation of those whom the operation of natural agencies tends to eliminate ... may conceivably destroy the race." For Davenport and many of his compatriots, the fundamental project of “racial hygiene” was undermined by advances in medical science and public health programs. This claim would be repeated openly across the field up to the outbreak of World War II, when it became an urgent national project to erase America’s leading role in shaping the fundamental logic of Naziism and the Holocaust.

In his 1925 Our Present Knowledge of Heredity Michael F. Guyer writes:

It is evident that if the weak, the insane, the feeble-minded, and the pauper were left to nature, they would die of disease, the rigors of the climate, or from the inability to secure food… But with our improved methods of sanitation, with our care of the sick, with our charities and philanthropy, we protect and foster such misfits, permitting them to grow to maturity and produce their like. In other words, we have… so eased the rigors of what the biologist calls natural selection that decadent stocks … are increased relatively faster than normal stocks. (219)

One could be excused for imagining Guyer to be an extremist voice in "jazz age" America. However, like Davenport, he was a respected academic—in his case a professor of zoology at the University of Wisconsin. And the views he shares in this volume were delivered in a series of lectures, as the volume’s subtitle proudly proclaims, at “the Mayo Foundation and the Universities of Wisconsin, Minnesota, Nebraska, Iowa, and Washington.” This was mainstream thinking in broad swaths of American political and intellectual society. While World War II would lead to the disavowal of public declarations in favor of withholding medical care and public services in order to kill off populations of "misfits" deemed “unfit,” the thinking—and the conversations—never ceased. They simply moved to the outer edges of political discourse, their proponents branded "kooks" by William F. Buckley, the public face of post-war conservatism, even as he and his fellow mainstream Republicans maintained ongoing collaborations with those they had claimed to exile.[[1]]

[[1]]: For a fascinating history of post-war conservatism’s dance with fascism, see Taking America Back: The Conservative Movement and the Far Right (2024) by David Austin Walsh.

The kind of thinking articulated in mainstream academic eugenics into the post-war era was muted but never exorcised from American politics—as we can see in its return to the megaphone in 2025. Nor was it immediately excised from establishment higher education. Into the 1970s, attempts were made to redeem eugenics from its past associations with genocide by focusing on the encouragement of positive traits rather than the suppression of “negative” ones. This was especially the project of Frederick Osborn, who represented its respectable face during the post-war years. Of course, many of the research projects conducted under this rationale—while more diplomatic in their framing than Davenport and Guyer a half-century earlier—remained fundamentally committed to the premises that some lives were more worthy (of saving, of reproducing) than others.

Eventually neo-Malthusian narratives displaced the more explicitly eugenic arguments, reviving anxieties about a global population on the verge of outstripping its resources. Stanford researchers Paul R. and Anne H. Ehrlich’s The Population Bomb (1968) was the most sensational in a series of titles in the 1960s and early 70s focusing on the consequences of having failed to cull the herd in previous generations. Here the “crisis” was addressed on a global scale not in the language of “race” and “fitness,” but of population control. The Population Bomb had been written at the encouragement of the Sierra Club, the pioneering conservation group with its own ties to historical racism and eugenics through its founder John Muir and Stanford President David Starr Jordan, who served as its director for over a decade.

By the mid-1970s, even this version of academic eugenics—the death of “the white race” now replaced with the extinction of humanity—was fading from academic discourse. Many of The Population Bomb’s predictions were undermined almost immediately, as what would prove a long-term downward turn in the global population growth rate began right after the book’s publication. Perhaps more important in defusing the force of the “population bomb” narrative was the “green revolution” (a term first used in 1968, the year of The Population Bomb’s publication), which spread American agribusiness high-yield grain crops, irrigation systems, chemical fertilizers, and pesticides to parts of the world where food was scarce. While today we are of course keenly aware of environmental and other costs of this “revolution,” at the time it presented a strong counter-argument to the Ehrlichs, suggesting that modern technology could feed a world supposedly on the brink of starvation.

As with the breakthroughs in modern medicine in the first decades of the 20th century, the breakthroughs in farming and food production in the final decades represent direct threats to the fundamental dream of eugenical thinking—the dream that scarcity and existential threat would provide the occasion for setting aside sentimental liberalism in favor of hard choices as to who should be saved. While there was some critique from the dwindling ranks of eugenicists that the green revolution represented a dangerous break with the natural order, it was muted. By this time the mores of academic discourse had changed markedly, especially after the turmoil of the 1960s drove many conservative academics out of the university and into the expanding parallel universe of rightwing think tanks.

If this discourse disappeared from much of academia, it did not disappear from political discourse—even as it moved more to its fringes in terms of public declarations. Nor did it disappear from the new parallel universe of well-subsidized think tank research. For example, the Pioneer Fund was originally founded in 1937 to disseminate eugenics and to encourage the propagation, in their own words, of people “descended predominantly from white persons who settled in the original thirteen states prior to the adoption of the Constitution of the United States.” The organization had deep ties at its founding to the Nazi party in Germany, and it maintained a commitment to funding research into “racial betterment” into the 21st century.

Its most famous sponsored project was Richard J. Herrnstein and Charles Murray’s The Bell Curve: Intelligence and Class Structure in American Life (1994). Among other claims, Herrnstein and Murray argued that differences in intelligence, rather than racism or lack of opportunity, largely explain racial inequality in America, and claimed to provide statistical data to prove that people with low cognitive ability are increasingly overrunning the population in the United States.

Herrnstein, who died shortly before the book was published, was a psychologist at Harvard. Murray, however, had spent his entire academic career in the shadow worlds of think tanks and private foundations. By the time he received his PhD in political science from MIT in 1974, higher education was perceived by many conservative thinkers—especially those defending the "science" of racial hierarchy—as hostile territory. But there was little reason to be dissuaded from continuing this work if one could find, as Murray did, safe haven for conducting a scholarly career outside the unfriendly confines of the ivory tower. Murray began his career at the non-partisan American Institutes for Research, but by 1981 he had moved to the newly established Manhattan Institute for Policy Research, a conservative center founded by soon-to-be Reagan CIA director William J. Casey.

*️⃣

One of the most prominent justifications for the current assault on higher education is the supposed lack of “intellectual diversity” on today’s campuses. "Intellectual diversity" always refers to "political diversity," and to the supposed silencing of conservative research and ideas at universities. And yet the “evidence” for this assault has largely been ginned up by scholars who have lived much of their scholarly lives in think tanks like the Manhattan Institute, where political orthodoxy is literally a basic requirement for admission. Of course, when higher education’s critics bemoan the lack of "intellectual diversity" on today’s American campuses, much of what they are mourning is a university where research like The Bell Curve would be treated with the respect pre-war eugenics had been accorded.

At the time The Bell Curve was published I was in my final year in graduate school, working on a dissertation on racial science and early American literature. My immersion in the history of racial science had been focused on the 18th and 19th centuries, and so the arguments Herrnstein and Murray offered in The Bell Curve felt like aberrant atavistic voices I had thought long dead. The book was received similarly by most of my professors at the time, who recoiled at its openly racist assertions. Within a couple of years, numerous essays and even entire books had been published countering the methods, data, and claims of the book.[[2]]

[[2]]: In the popular press, the response was predictably more intense, both on the part of critics and on the part of defenders identifying in the outraged response evidence of the unwillingness of liberal America to face “facts.”

Rereading the academic response from the 90s today, I am struck by its measured and scholarly approach, despite the fact that the claims in The Bell Curve were both objectionable and based on deeply flawed logical argumentation. The insistence that intelligence is primarily inheritable was and remains highly speculative at best. The insistence that IQ tests are an effective measure of what could be plausibly termed "intelligence" is at best deeply naive. That those two flawed assumptions are foundational to any meaning read into IQ results across racial and ethnic groups (distributed, “naturally,” across the titular bell curve) undermines from the start any “lessons” that might be drawn from this research, and makes their application to social policy and public health terrifying.

Of course, lessons were being applied. Murray did not write his book for academics, nor was he writing it for a popular audience. The book’s primary audience—like that of so much scholarship sponsored by rightwing think tanks—was those directly responsible for shaping policy and legislation. That the book was a sensation—embraced and reviled—was a bonus, elevating the arguments to mainstream media attention. But its primary function was to provide academic evidence for policy makers eager to dismantle the social safety net.

We can trace a direct line from The Bell Curve to the “Personal Responsibility and Work Opportunity Reconciliation Act,” the welfare "reform" bill signed into law by President Clinton in 1996. The amplifying and mainstreaming of doubts as to the effectiveness and ethics of social programs, affirmative action, and government interventions in support of the vulnerable found powerful and long-lasting ammunition in The Bell Curve and in numerous related works sponsored by similar research organizations that made up the right-wing parallel to the nation's research universities. The sensation surrounding the book helped grease the slide into the world we are now inhabiting, demonstrating the political value of the shadow academia which is currently reshaping the university from without and within.

Murray spent more time after the book’s publication speaking at friendly research centers and gatherings of policy makers than at the college campuses he had abandoned after 1974. While there was some pushback to his visits to campuses in the late 90s and early 2000s, none prevented him from speaking as scheduled. Most pushback took the form of letters to the editor in campus newspapers and organized counter-events.

By the 2010s, everything had changed, epitomized by the infamous 2017 protest at Middlebury College in which Murray was prevented from speaking and later confronted by masked protestors when leaving campus, resulting in injury to the Middlebury professor who was to have interviewed him during the event. Protests continued throughout the spring and into the fall of the next academic year at institutions such as Harvard, University of Michigan, and Claremont McKenna College, although none reached the levels of disruption and violence of the March Middlebury protest.

Murray’s sudden interest in 2017-18 in touring the campus circuit might seem strangely timed, especially given that his most recent books—including The Curmudgeon's Guide to Getting Ahead (2014)—were over two years old at the time. Of course, the timing was anything but accidental, following on the heels of Trump’s January 2017 inauguration. It was a tour designed to garner precisely the response on campuses that it did, and campus protestors were all too happy to provide grist for the mill in the run-up to what was to be Trump's second term assault on higher education.

That assault had to be delayed, of course, due to the election in 2020 of Biden. But the work continued in think tanks, policy centers, and in the halls of Congress, where the “fringe” theatrically exiled by Buckley a half century earlier had worked their way to the center of the party. Now it was the Buckley-style conservatives who found themselves pushed to the fringes or driven out of politics by an increasingly radicalized base. If anything, the four-year interregnum allowed time for planning and coordination of the blitzkrieg of Trump II’s dismantling of expertise and the institutions that accredited it, the remnants of already anemic social services for the most vulnerable at home and abroad, and the research and medical infrastructure that sustained the lives of vulnerable populations around the world.

Let me say plainly what I hope is clear. Millions of lives will be lost if this administration continues unchecked for another two years.[[3]]

[[3]]: Two years, of course, seems like the best case scenario, as the courts struggle to find means of holding the administration to account and GOP representatives live in fear of the consequences of breaking with the administration. And even that hope for some brakes to be applied in two years presumes a free and fair midterm election, something that feels far from certain.

The suggestion that millions of lives will be lost as a result of destruction perpetrated in two years’ time might seem hyperbolic, but I promise it is not. If we were to factor in the setbacks to climate change research and mitigation commitments, we are looking at numbers considerably higher. But even setting climate change aside (as we are all too comfortable doing), eliminating the CDC’s Division of HIV Prevention alone could well result in over 143,000 new HIV infections and 14,000 AIDS-related deaths in the U.S. According to the Foundation for AIDS Research, the impact will be considerably more devastating globally, with the shuttering of U.S. international AIDS funding resulting in as many as 3 million deaths in the next five years.

And this is just the consequence of cuts to one vital component of our medical, research, and public health enterprise. One doesn’t have to look deep into the list of canceled funding to start envisioning the toll in human lives. And, again, this is just direct cancellations of NIH funding. The destructive path is so much broader, and the human costs will play out for a generation, easily resulting in a higher global death toll than any human-engineered casualty event in human history. And unlike the Nazis during the Holocaust or the Khmer Rouge during the Cambodian genocide of the 1970s, the architects will retain deniability, however implausible, that they have anything to do with what is clearly “nature’s will.”

Responding in 1994 to The Bell Curve on The McLaughlin Group, Holocaust denier and “paleoconservative” Pat Buchanan blithely declared, "I think a lot of data are indisputable. ... It does shoot a hole straight through the heart of egalitarian socialism which tried to create equality of result by coercive government programs.” At the time even the show’s host John McLaughlin pushed back on the enthusiasm of Buchanan and his fellow panelists for the book’s conclusions.

In 2025, eugenics need not speak its name nor defend itself to exert more destructive power than ever it did in its official heyday. It is the policy underwriting everything, no longer articulated through the selection and breeding of "favorable" human traits, but now through a disavowal of responsibility for those who cannot survive the sudden withdrawal of aid and medicine, those who will not survive without the research that would have prevented or mitigated the next pandemic, and the planet that will not remain habitable without the global commitments necessary to forestall the worst consequences of climate change.

Last month Elon Musk declared war on "civilizational suicidal empathy.” “The fundamental weakness of Western civilization is empathy,” he said, describing it as the “bug in western civilization” that, unchecked, will lead to civilization’s end. In the logic of eugenics embraced by Musk and his allies, reproducing the best of the species no longer depends on genetics. Wealth, power, and the keys to the bunker are sufficient to ensure that only the fit will survive in this brave new world. And if the rest of humanity dies off, there will always be AI to keep them company.
